Introduction

As the adoption of Generative AI (GenAI) models continues to surge across various industries, selecting the appropriate LLM deployment strategy has become a critical decision for businesses.

Companies that want to use powerful models like ChatGPT, Claude, or Mistral must consider critical factors such as control over their data, security, latency, and regulatory compliance.

This guide grew out of our realization that many companies are still wondering which level of deployment is sufficient to cover their needs at the right cost. We will walk through the different levels of usage and the associated deployment options for GenAI models, to help you make an informed decision tailored to your business needs.

Generative AI deployment level options

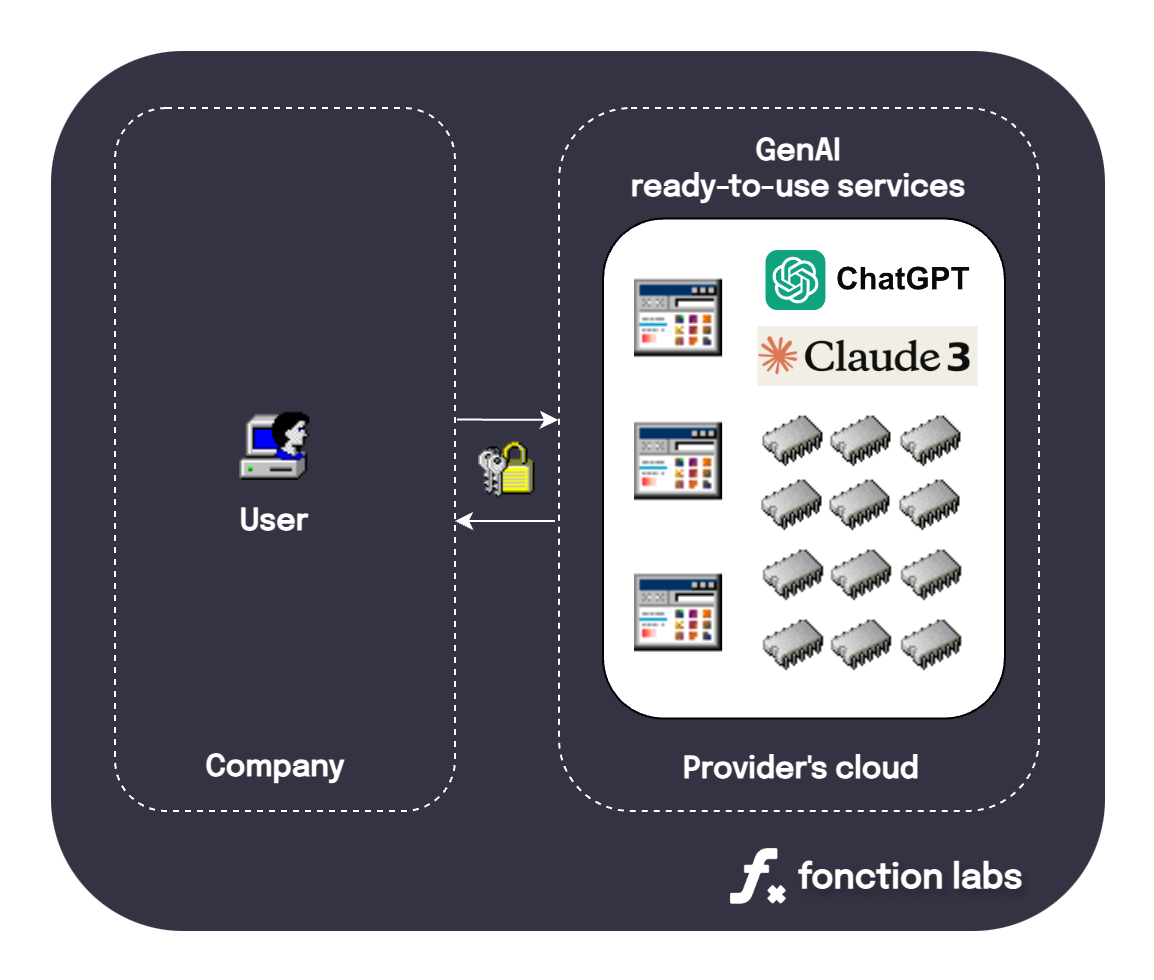

1. Plug-and-play service subscription

Plug-and-play service subscription option for GenAI

The best example of this level of usage and deployment is probably OpenAI’s ChatGPT, a ready-to-use platform designed for immediate integration into your business operations, with pricing tiers that depend on the desired security and privacy features (such as ChatGPT Enterprise).

This option is ideal for teams looking to leverage advanced AI capabilities without a customized, extensive technical setup or ongoing maintenance.

Key Features

- Ease of use: Quick deployment with minimal configuration.

- Scalability: Seamless usage scaling as your business grows.

- Support: Access to customer support and the latest model updates.

Downsides

- Security & privacy: You do not have full control over the data exchanged and stored; its protection depends on the provider’s terms and conditions and on how much you trust the provider (the same issue as with VPNs).

- Lock-in effect: Once you pay for a subscription and have integrated the platform into your processes, migrating to another provider may be difficult.

- Limited extensibility: Handling more complex or customized use cases is much harder, or even impossible.

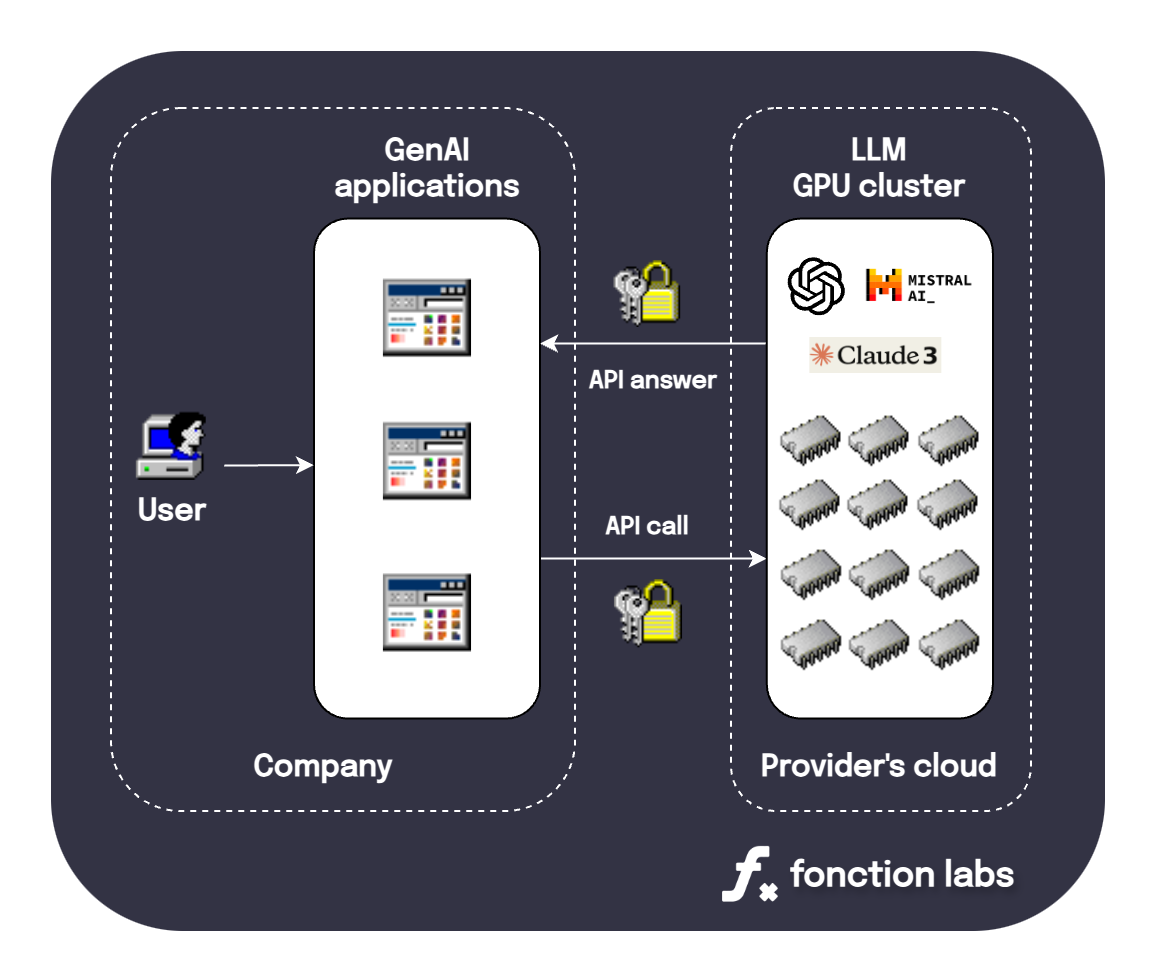

2. Pay-per-request (with APIs)

Pay-per-request API deployment option for GenAI

Using API services from companies like OpenAI, Mistral, or Anthropic provides more flexibility, as well as better cost efficiency, than the subscription option. The API option allows businesses to integrate AI capabilities into their existing systems without substantial upfront investments.

With this option, you only pay per LLM request sent via the API, and each request is processed on the provider’s cloud. Even though your data still travels to and from the provider’s servers, security policies are usually strong and the data is encrypted. For instance, OpenAI states that it neither owns nor uses customers’ input data for training or internal purposes.

Paying for an external provider’s API is usually the right level of usage for companies wishing to build customized projects based on the latest, best-performing models.
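As a rough illustration of what a pay-per-request integration involves, the sketch below builds (but does not send) a single HTTPS request in the style of OpenAI’s chat completions endpoint, using only the Python standard library. The helper name `build_chat_request`, the `OPENAI_API_KEY` environment variable, and the example model name are assumptions made for illustration; other providers expose similar but not identical endpoints, so check the provider’s API reference for the exact fields.

```python
import json
import os
import urllib.request

# Assumed endpoint: OpenAI's chat completions URL.
API_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(prompt: str, model: str, api_key: str) -> urllib.request.Request:
    """Build one billable chat request (hypothetical helper; nothing is sent)."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# The key is read from the environment so it never lands in source control.
request = build_chat_request(
    prompt="Summarize our refund policy in two sentences.",
    model="gpt-4o-mini",  # example model name; use whichever your provider offers
    api_key=os.environ.get("OPENAI_API_KEY", "sk-placeholder"),
)
# Sending it would be: urllib.request.urlopen(request, timeout=30)
```

Each call of this kind is billed individually, which is why this option needs no upfront investment beyond the integration work itself.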

Key Features

- Cost efficiency: Only pay for what you use.

- Flexibility: Integrate AI into various applications and workflows, without worrying about computation and scaling.

- Performance: Benefit from continuous improvements and the latest model updates from the providers.

Downsides

- Higher complexity: Integrating the API requires more technical knowledge than a service subscription.

- Trust: Even if your data is secured and not supposed to be stored, it still travels to and from the provider’s servers, so you have to trust the company providing the API.

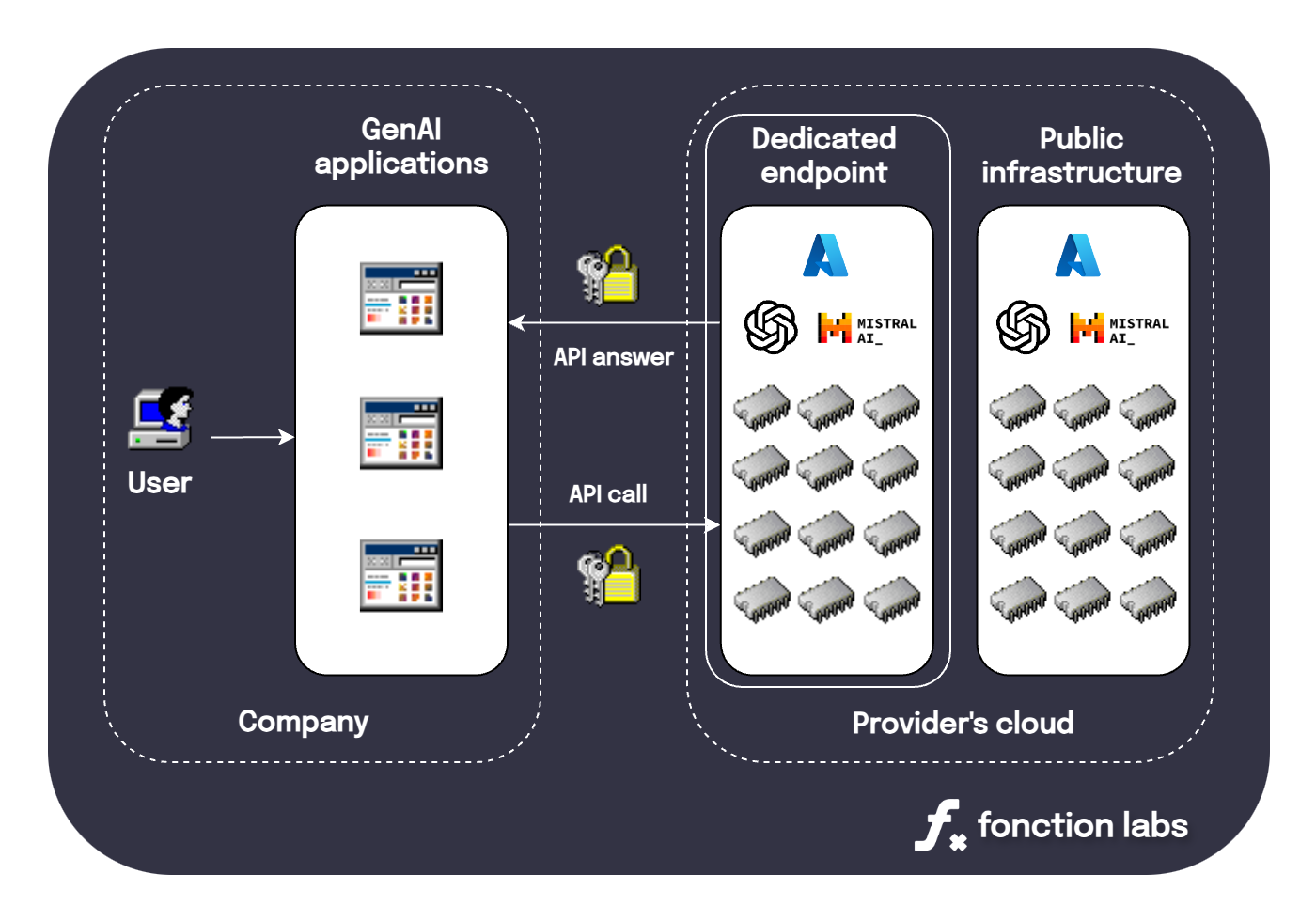

3. Dedicated Endpoint

Dedicated endpoint deployment option for GenAI

For businesses requiring more control and customization, setting up a dedicated endpoint on a cloud provider like Azure is a viable option. This setup offers enhanced control over data handling and latency, making it suitable for industries with stringent security requirements. It can be arranged by talking to the sales team at OpenAI, or more easily with Mistral on Azure.

Key Features

- Increased control: You can manage your data and the model’s behavior more finely.

- High level of customization: A tailored deployment can meet more specific business needs.

- Improved security: Leverage cloud security measures and compliance certifications.

Downsides

- Higher costs: Setting up a dedicated endpoint means continuously paying for its availability (unlike the per-request option).

- Trust: The data still leaves your premises and travels to and from the endpoint provider’s cloud.
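The cost trade-off with the per-request option can be made concrete with a break-even estimate. The sketch below assumes a flat hourly price for the dedicated endpoint and a flat average price per API request; every figure is a hypothetical placeholder, not an actual provider price.

```python
HOURS_PER_MONTH = 730  # average number of hours in a month (8760 / 12)


def monthly_endpoint_cost(hourly_price: float) -> float:
    """Cost of keeping a dedicated endpoint available around the clock."""
    return hourly_price * HOURS_PER_MONTH


def break_even_requests(hourly_price: float, price_per_request: float) -> float:
    """Monthly request volume above which the dedicated endpoint is cheaper."""
    return monthly_endpoint_cost(hourly_price) / price_per_request


# Hypothetical prices, for illustration only.
endpoint_hourly_price = 4.0   # currency units per hour
api_price_per_request = 0.01  # currency units per request

volume = break_even_requests(endpoint_hourly_price, api_price_per_request)
print(f"Break-even at about {volume:,.0f} requests per month")
```

With these placeholder prices, the endpoint costs 2,920 per month, so it only pays off beyond roughly 292,000 requests per month. Below that volume, the per-request option remains cheaper.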

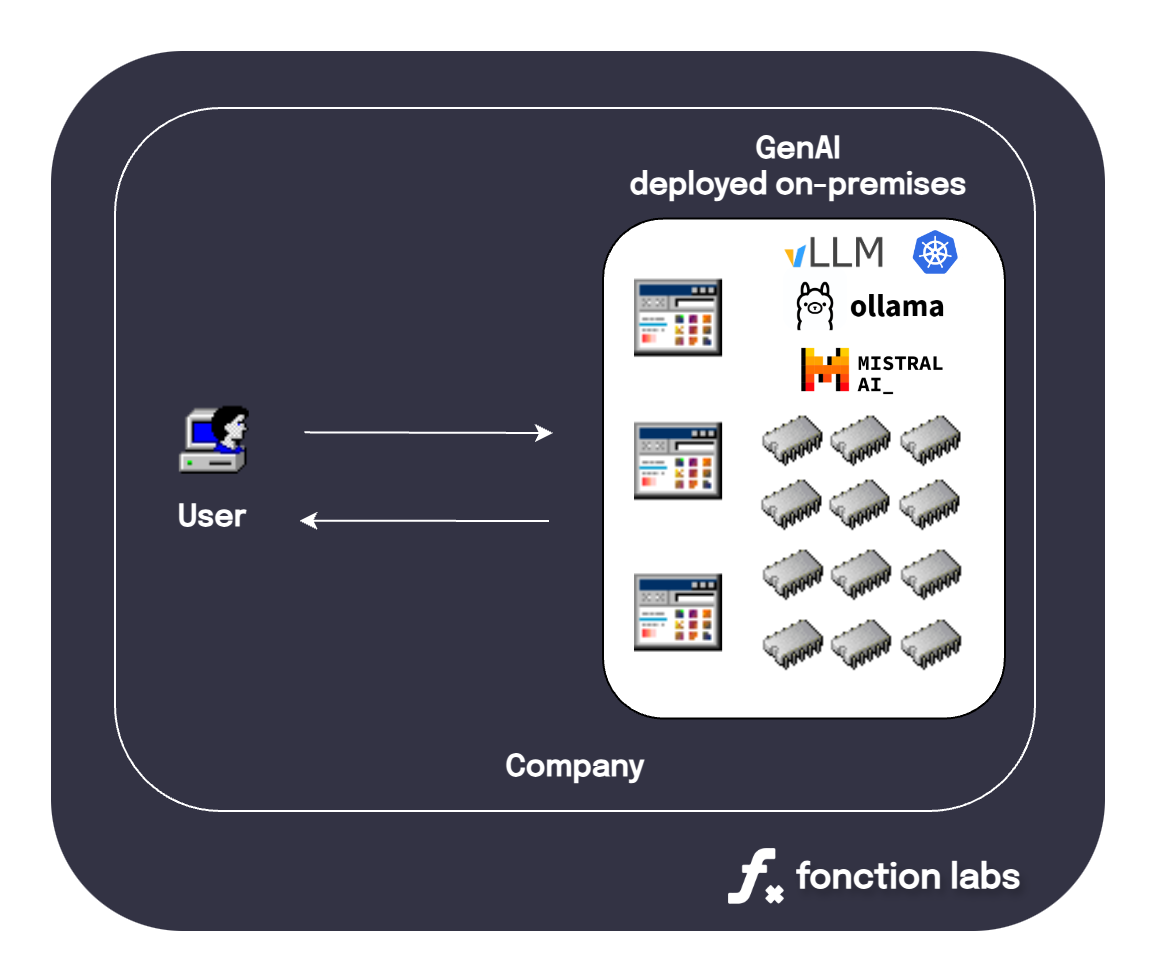

4. On-premises deployment

On-premises deployment option for GenAI

Deploying GenAI models on your own premises provides the highest level of control, which is essential for industries with the most stringent security and compliance requirements, such as the military or healthcare.

This option ensures that the data remains within the organization’s infrastructure, without delegating security to the model provider. This level of control can therefore only be achieved with open-source models, like Llama 3 or Mixtral, served with frameworks like vLLM. It also requires a high level of expertise to parallelize and scale model usage while keeping latency low.
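To give a sense of the hardware involved, the sketch below estimates the memory needed just to hold a model’s weights, as parameter count times bytes per parameter. It is a deliberately rough lower bound: it ignores the KV cache, activations, and framework overhead, and the parameter counts are round figures in the range of the Llama 3 8B and 70B models. The function and table names are hypothetical.

```python
# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str = "fp16") -> float:
    """Approximate memory for the weights alone, in GB (10^9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


# Round parameter counts, for illustration only.
for name, params in [("8B model", 8e9), ("70B model", 70e9)]:
    print(f"{name}: ~{weight_memory_gb(params):.0f} GB of weights in fp16")
```

A model whose weights exceed the memory of a single GPU has to be split across several devices, which is one reason on-premises serving calls for parallelization expertise.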

Key Features

- Maximum security: Complete control over your data and model security.

- Better regulatory compliance: An on-premises deployment can meet the strictest industry regulations.

- Customization and control: Full access to the model parameters for performance tuning.

Downsides

- Higher infrastructure and maintenance costs: Setting up LLMs on-premises is more costly and requires a heavier maintenance workload.

- Higher complexity: Setting up and scaling on-premises GenAI models is difficult and requires a high level of expertise.

- Limitation to open-source models: Hosting models on your own servers means restricting yourself to open-source models, which are (at the time of writing) not as performant as proprietary models like the ones from Anthropic and OpenAI.

Security in Generative AI deployments

When deploying LLMs, securing your data is paramount. OpenAI, for instance, emphasizes that it does not train its models on business data from ChatGPT Team, ChatGPT Enterprise, or its API platform. Furthermore, businesses retain ownership of their inputs and outputs and can control data retention periods.

However, trust remains a critical factor when relying on third-party providers.

Here are what we consider the key security considerations for your business:

- Encryption

Encrypting the data ensures that sensitive information remains protected while in transit to and from a third-party provider’s server, guarding against man-in-the-middle (MitM) attacks.

- Data ownership

Keeping legal ownership of your data ensures that it is not used for purposes other than yours, such as training a model or being sold for advertising and marketing.

- Retention control

Controlling data retention lets you decide how long your data stays on the provider’s servers.
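On the client side, the encryption point mostly comes down to never disabling TLS verification. As a minimal sketch using Python’s standard `ssl` module, the hypothetical helper below returns a context that verifies the server certificate and hostname and refuses protocol versions older than TLS 1.2; the version floor is our assumption, not a requirement stated by any provider.

```python
import ssl


def strict_tls_context() -> ssl.SSLContext:
    """Return a client-side TLS context with verification enabled."""
    # create_default_context() turns on certificate and hostname checks.
    context = ssl.create_default_context()
    # Refuse protocol versions older than TLS 1.2.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


context = strict_tls_context()
# Pass it to the HTTP client, e.g. urllib.request.urlopen(url, context=context)
```

A failed certificate check then raises an error instead of silently sending data to an impersonated server.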

For industries requiring better control, dedicated endpoints or on-premises deployments offer greater security by keeping data within a controlled environment, reducing reliance on external entities.

Making the right choices

Choosing the appropriate deployment strategy depends on several factors, including regulatory requirements, industry-specific needs, and business priorities.

Here is a brief guideline to help you decide:

- Ease of use and quick deployment: opt for ready-to-use services, like ChatGPT.

- Cost efficiency and flexibility: use an API from a provider.

- Enhanced control and customization: set up a dedicated endpoint on a cloud provider.

- Maximum security and regulatory compliance: deploy open-source models on-premises.
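The guideline above can be written down as a simple lookup, which may be handy as a starting point for an internal checklist. This is only a sketch: the `Priority` enum and `recommend` function are hypothetical names, and a real decision usually weighs several priorities at once.

```python
from enum import Enum


class Priority(Enum):
    EASE_OF_USE = "ease of use and quick deployment"
    COST_AND_FLEXIBILITY = "cost efficiency and flexibility"
    CONTROL = "enhanced control and customization"
    MAX_SECURITY = "maximum security and regulatory compliance"


# One recommended deployment level per dominant priority, as in the guideline.
RECOMMENDATION = {
    Priority.EASE_OF_USE: "plug-and-play service subscription",
    Priority.COST_AND_FLEXIBILITY: "pay-per-request API",
    Priority.CONTROL: "dedicated endpoint on a cloud provider",
    Priority.MAX_SECURITY: "on-premises deployment of an open-source model",
}


def recommend(priority: Priority) -> str:
    """Return the deployment level suggested for a single dominant priority."""
    return RECOMMENDATION[priority]


print(recommend(Priority.CONTROL))
```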

Conclusion

Selecting the right deployment strategy for GenAI models is crucial for maximizing their potential while ensuring data security and compliance. By understanding the different levels of usage and deployment, businesses can make informed decisions that align with their specific needs and regulatory environment.

Whether you choose the convenience of ready-to-use services, the flexibility of APIs, the higher control of dedicated endpoints, or the security of on-premises deployments, the key is to evaluate your requirements and pick the option that best fits your organizational goals.

At Fonction Labs, we can help you choose, build, and deploy the right solution for you.