The issue with prompt engineering

As businesses rush to integrate Large Language Models (LLMs) into their workflows, a variety of implementation patterns have emerged. So far, much of the focus has centered on prompt engineering: crafting the right input to get the desired output.

But is that all there is to solving business problems with LLMs?

Prompt engineering is certainly useful, but it’s far from the full picture. In practice, it’s just one piece of a larger puzzle. For example, if you use LLM-based chatbots to help draft emails, you’ve probably noticed that you rarely use the same prompt twice. Context matters, and static prompts often fall short.

And here’s the real question: if you’re constantly spending time writing and tweaking prompts, are you truly automating the task? Or have you simply shifted the burden one level up—from doing the task (like writing emails) to describing how to do the task (through prompts)? The result is a kind of “meta-work” that still demands significant human input.

So the core challenge becomes: is there a way to fully solve complex tasks without having to repeatedly customize prompts?

The answer lies in working with workflows and agents, which we will discuss further in this article.

The shift: from prompting to orchestrating

A slightly more structured, but still relatively simple, approach than pure prompting is to break complex tasks into smaller, well-defined subtasks. These are then handled through deterministic chains of prompts, forming a repeatable process.

For instance, if you’re building a document summarizer, you might design a workflow like:

- Retrieve relevant documents (e.g., using RAG),

- Chunk them intelligently,

- Summarize with an LLM,

- Return the final answer.

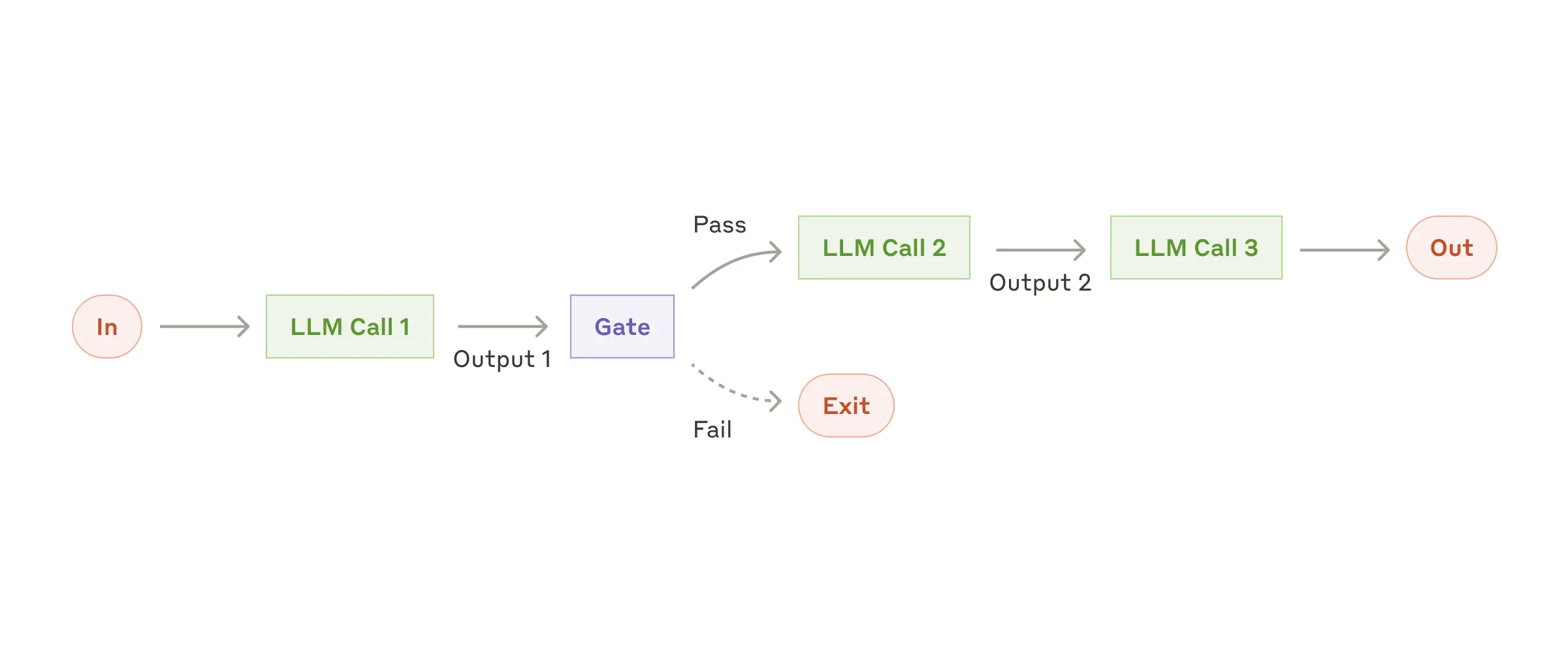

Example of a deterministic workflow (chain-of-prompts) (courtesy of Anthropic)

These systems are structured, predictable, and work well when the steps are known in advance and stable over time. They are commonly referred to as deterministic workflows, or chains of prompts.
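The four steps above can be sketched as a fixed chain of functions. This is a minimal illustration, not a production design: `fake_llm` is a hypothetical stand-in for a real model call, the keyword-based `retrieve` is a placeholder for RAG, and all function names are assumptions made for this example.

```python
from typing import Callable, List


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for an LLM call.

    It returns the first sentence of the text that follows the
    instruction line, so the example runs without any external service.
    """
    text = prompt.split("\n", 1)[1]
    return text.split(".")[0].strip() + "."


def retrieve(query: str, corpus: List[str]) -> List[str]:
    """Step 1: naive keyword retrieval (a placeholder for RAG)."""
    terms = query.lower().split()
    return [doc for doc in corpus if any(t in doc.lower() for t in terms)]


def chunk(doc: str, max_chars: int = 200) -> List[str]:
    """Step 2: fixed-size chunking (real systems usually split more carefully)."""
    return [doc[i:i + max_chars] for i in range(0, len(doc), max_chars)]


def summarize(chunks: List[str], llm: Callable[[str], str]) -> str:
    """Step 3: summarize each chunk, then join the partial summaries."""
    return " ".join(llm("Summarize:\n" + c) for c in chunks)


def run_workflow(query: str, corpus: List[str],
                 llm: Callable[[str], str] = fake_llm) -> str:
    """Step 4: run the steps in a fixed order and return the final answer."""
    docs = retrieve(query, corpus)
    chunks = [c for d in docs for c in chunk(d)]
    return summarize(chunks, llm)
```

The order of the calls in `run_workflow` never changes, whatever the input. That is what makes the workflow deterministic: the model fills in content, but it has no say in which step runs next.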

But what if you can’t predefine the steps? Imagine a scenario where the problem space is too broad, the path to the answer uncertain, or the criteria inherently subjective. Examples include assisting a customer support agent in responding to complex inquiries, or analyzing ambiguous payment behavior for fraud.

In such cases, you need something more adaptive: an AI agent.

AI agents vs. deterministic workflows

You may have heard that “2025 is the year AI agents go mainstream”. You may also have heard that, unlike basic chatbots, AI agents can reason, adapt, and coordinate multiple tasks with human-like fluency. It sounds like magic.

But how do tech-savvy AI professionals actually describe agents?

OpenAI defines agents as:

systems that independently accomplish tasks on your behalf.

In contrast, deterministic workflows are:

predefined sequences of steps that must be executed to meet the user’s goal.

Put differently, an AI agent autonomously plans and executes its steps in real time, while the steps of a deterministic workflow are fully defined in advance by the user or AI engineer.

The document summarizer described in the section above is a good example of a deterministic workflow. The steps required to meet the user’s goal are clear, and they are defined by the AI engineer or user when designing the workflow.

So, what truly makes an AI agent?

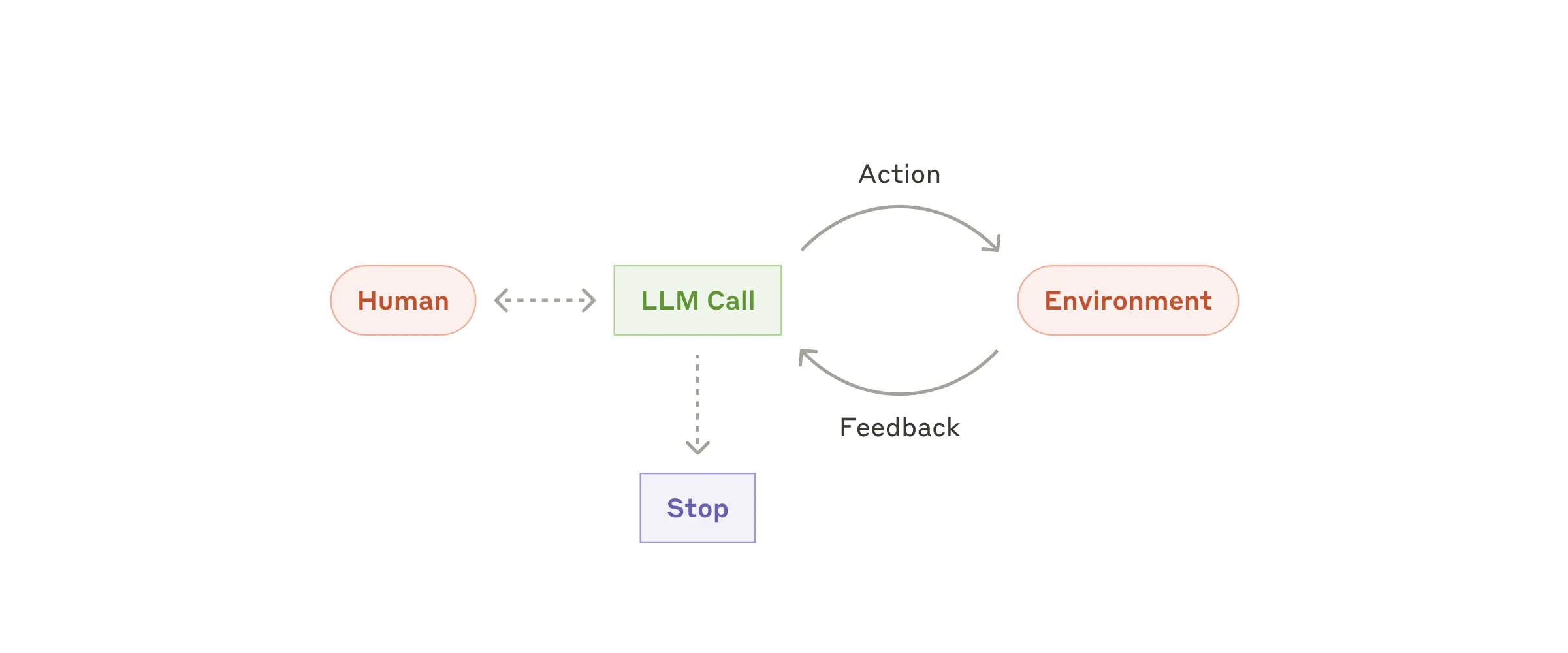

Example of an autonomous agent (courtesy of Anthropic)

An agent has two core capabilities that allow it to act reliably and consistently on behalf of a user:

- LLM-guided: Agents use an LLM to dynamically generate workflows and make decisions. They determine when a workflow is complete, and can correct their own course or hand control back to the user when needed.

- Environment-augmented: Agents can use tools (like APIs, databases, browsers) to act (outflow) and gather information (inflow). They choose tools dynamically, adapting to the current step in the workflow, all within guardrails that maintain safety and control.
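These two capabilities can be illustrated with a small agent loop. The sketch below rests on several assumptions: `stub_policy` stands in for the LLM that would normally choose the next action, `lookup_order` is a hypothetical tool, and the two guardrails (a step limit and a tool allow-list) are simple examples rather than a complete safety design.

```python
from typing import Callable, Dict, List, Tuple

Step = Tuple[str, str, str]  # (action, argument, result)


def lookup_order(order_id: str) -> str:
    """Hypothetical tool: returns the status of an order."""
    orders = {"A1": "shipped", "B2": "delayed"}
    return orders.get(order_id, "unknown")


# Allow-list of tools the agent is permitted to call.
TOOLS: Dict[str, Callable[[str], str]] = {"lookup_order": lookup_order}


def stub_policy(goal: str, history: List[Step]) -> Tuple[str, str]:
    """Stands in for the LLM and returns (action, argument).

    A real agent would send the goal and history to a model here
    and parse its reply into the next action.
    """
    if not history:
        # Assumes the order ID is the last word of the goal.
        return ("lookup_order", goal.split()[-1])
    return ("finish", f"Order status: {history[-1][2]}")


def run_agent(goal: str,
              policy: Callable[[str, List[Step]], Tuple[str, str]] = stub_policy,
              max_steps: int = 5) -> str:
    history: List[Step] = []
    for _ in range(max_steps):  # guardrail: bounded number of steps
        action, arg = policy(goal, history)
        if action == "finish":  # the policy decides when the work is done
            return arg
        if action not in TOOLS:  # guardrail: only allow-listed tools
            return "Handing back to user: unknown tool requested."
        result = TOOLS[action](arg)  # act on, or read from, the environment
        history.append((action, arg, result))
    return "Handing back to user: step limit reached."
```

For example, `run_agent("Check status of order B2")` calls the tool once and then finishes with the status it found. Unlike a fixed chain, the sequence of steps is decided inside the loop at run time, and the loop hands control back to the user when a guardrail is hit.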

Why not just use deterministic systems?

Deterministic systems, while powerful, struggle with ambiguity and complexity. They work best when logic and rules can be clearly defined: “if X, do Y”. But many real-world tasks defy such strict logic and require more nuance.

Take payment fraud detection:

- Traditional rules engines are like checklists: they flag transactions based on known thresholds (e.g. “flag if amount > $10,000”) or on specific, predefined situations.

- Agents behave more like experienced investigators: weighing subtle signals, reasoning through incomplete data, and adapting strategies over time.

Agents shine when decision-making must account for context, nuance, or evolving criteria.
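To make the contrast concrete, here is a minimal sketch of the checklist-style rules engine described above. The threshold comes from the example rule in the text. The `Transaction` fields and the blocked-country rule are hypothetical additions for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Transaction:
    amount: float
    country: str


THRESHOLD = 10_000          # from the example rule "flag if amount > $10,000"
BLOCKED_COUNTRIES = {"XX"}  # hypothetical "specific situation" rule


def rule_flags(tx: Transaction) -> List[str]:
    """Return the names of every fixed rule the transaction violates."""
    flags = []
    if tx.amount > THRESHOLD:
        flags.append("amount_over_threshold")
    if tx.country in BLOCKED_COUNTRIES:
        flags.append("blocked_country")
    return flags
```

With these rules, `rule_flags(Transaction(12_000, "FR"))` reports `amount_over_threshold`, while `rule_flags(Transaction(9_999, "FR"))` reports nothing. A payment just under the threshold passes without question, because the engine only checks what its rules spell out. That gap is where an agent that weighs context could add value.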

When should you build an agent?

Not every use case requires an agent.

Before building one, validate your use case against these three criteria:

1. Complex decision-making

If your task involves subjective judgments or decisions that may change based on context, agents are a better fit.

Examples:

- Searching broadly for high-quality research papers on an evolving topic, without knowing in advance which sources to use.

- Deciding whether to approve a refund for a customer based on account history, tone of voice, and prior interactions.

- Assessing eligibility for financial aid in education based on a mix of qualitative and quantitative inputs.

- Handling edge-case exceptions in insurance claims that don’t match any fixed rule.

2. Difficult-to-maintain rules

When rules change frequently or are too vague or flexible, agents help reduce complexity.

Examples:

- Responding to compliance queries that vary by region and evolve over time.

- Managing legal hold procedures that depend on evolving internal policies and external regulations.

- Enforcing dynamic pricing rules across regions and promotions in an e-commerce platform.

3. Reliance on unstructured data

If your input data is mostly natural language or mixed formats (text, voice transcripts, semi-structured logs), LLM-based agents can reason and extract meaning where traditional systems falter.

Examples:

- Processing incoming legal documents to identify obligations and deadlines.

- Extracting relevant details from handwritten or scanned doctor’s notes for patient records.

- Responding to HR requests that come in through varied formats like Slack messages, emails, or voice notes.

Conclusion

Agents represent a shift in how we build automation: from rigid, rule-based processes to systems that reason, adapt, and act on their own.

Agents don’t just automate tasks; they automate thinking and action across workflows.

They are best suited for multi-step, or even multi-task, workflows that involve:

- Ambiguous decisions

- Rules that can’t be easily coded

- Unstructured inputs

If your organization is exploring agents or ready to deploy one, our team can help with expert guidance, prototyping, and production support. Reach out—let’s build intelligent automation that drives real results.